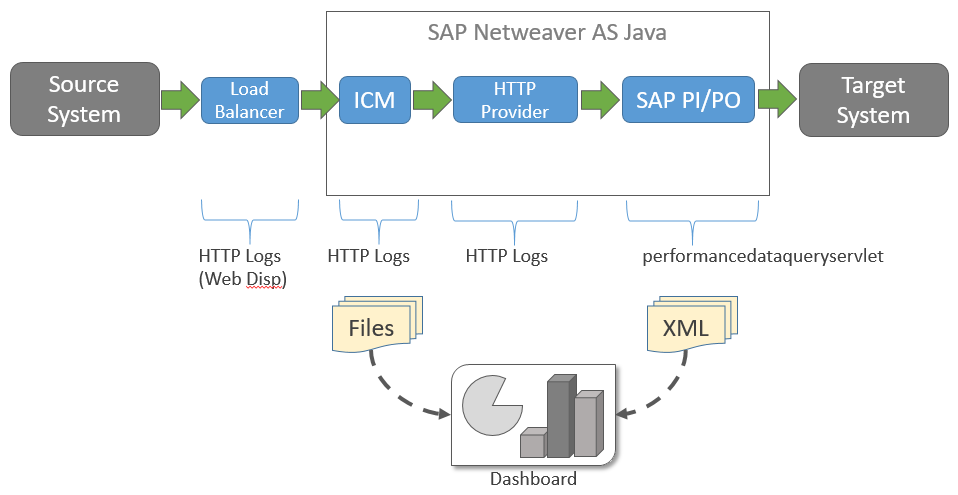

End-to-End performance analysis of a specific SAP PO/PI interface is a tricky business using the out-of-the-box tools. For example, you might be thinking that SAP Solution Manager can provide this detail, but it is not able to correlate inbound SAP Web Dispatcher HTTP logs or Netweaver Java ICM logs to the PI/PO interface.

Instead you need to implement your own monitoring to bring these components together, unless you implement a complete tool like AppDynamics.

In this post I will show how to extract the SAP PI/PO performance data and import it into Excel, using a Power Query to transform it from the XML format into something that we can report on.

After seeing my example, you can take the same design away and, using PowerBI, construct a dashboard for reporting. You can also choose to incorporate the HTTP logs, to give you an end-to-end performance analysis by interface.

This post is similar to another set of blog posts I published, showing how to pull data about your virtual machines directly from Azure, see: List Your Azure VMs in Excel. That other post used Power Query to transform JSON.

Data Sources

Before we go and start Excel, we need to understand where our data will be coming from.

There are 4 locations that we can report on in a SAP Netweaver AS Java stack running SAP PI/PO:

- SAP Web Dispatcher (load balancer across multiple App instances).

- SAP ICM (load balancer across multiple NW Java server nodes).

- SAP NW HTTP Provider.

- SAP PI/PO performance data servlet.

The last item is the key one, which will require some Power Query goodness to transform the data.

We use the 2014 blog post from Vadim Klimov to see how to pull this data direct from PI/PO using the servlet “PerformanceDataQueryServlet”.

For the Web Dispatcher, the ICM and the HTTP Provider, we really need to choose just one of those points to collect the log information.

Since our source system is handing over processing to “us” at the Web Dispatcher, that would be the logical location to collect the HTTP logs.

However, some companies use a load balancing appliance like an F5 at the entry-point, in which case, you would be better gathering the HTTP logs from each of the ICM server processes.

The reason for using the HTTP logs from the front end of the architecture stack is that you want to capture any HTTP 50x responses caused by unavailability of the back-end parts.

For example, if SAP Netweaver is down, then the Web Dispatcher logs would show an HTTP 503 (service unavailable).

If the PO application is not started inside SAP Netweaver, then the ICM logs would show an HTTP 503.

You want to collect the logs from the closest point of the handover between your source system and the Netweaver stack.

For the HTTP log sources, we have a little bit of an issue.

In most cases, logging is not enabled in the Web Dispatcher and ICM configurations. To enable the logging we need to look at the parameter “icm/HTTP/logging_<xx>”.

Ideally, what we need for the log format is: %h %u %t "%r" %s %H %L

This will give:

- %h = Source IP.

- %u = BASIC Auth username or cert common name.

- %t = Date/time.

- %r = The request with query string !IMPORTANT!

- %s = The HTTP response code e.g. 200 or 500 etc.

- %H = The name of the server host.

- %L = Response time in milliseconds.

The log file should be switched DAILY (SWITCHF=day) to prevent it from growing too large.

We will need to transform the data in the log, but we can do this in Power Query or in a script at source.
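As a minimal sketch of the "script at source" option, the Python below parses one line written in the log format above into named fields and rewrites the date/time as ISO 8601. It assumes that %t is written in Common Log Format style (for example [10/Jan/2021:00:00:01 +0000]) and that the fields are separated by single spaces. The sample line, its request path and its user name are invented for illustration, so check the pattern against one of your real log files first.

```python
import re
from datetime import datetime

# Assumed layout for the format %h %u %t "%r" %s %H %L, with %t written in
# Common Log Format style, e.g. [10/Jan/2021:00:00:01 +0000].
LOG_LINE = re.compile(
    r'^(?P<source_ip>\S+) (?P<user>\S+) \[(?P<timestamp>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<server_host>\S+) '
    r'(?P<response_ms>\d+)$'
)


def parse_log_line(line):
    """Return one log line as a dict, or None if it does not match."""
    match = LOG_LINE.match(line.strip())
    if match is None:
        return None
    record = match.groupdict()
    # Convert the timestamp to ISO 8601 so Excel/PowerBI can type it as a date.
    parsed = datetime.strptime(record["timestamp"], "%d/%b/%Y:%H:%M:%S %z")
    record["timestamp"] = parsed.isoformat()
    record["status"] = int(record["status"])
    record["response_ms"] = int(record["response_ms"])
    # A 50x status points at an unavailable back-end component.
    record["is_server_error"] = 500 <= record["status"] <= 599
    return record


# Hypothetical sample line, invented for illustration only.
sample = ('10.0.0.5 PIUSER [10/Jan/2021:00:00:01 +0000] '
          '"POST /example/path?interface=Test HTTP/1.1" '
          '503 sapts1app1 42')
print(parse_log_line(sample))
```

Lines that do not match return None, which makes it easy to count and inspect anything the pattern does not cover.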

Data Transfer

We now need to understand how we will transfer the data from the data source to Excel (or PowerBI).

Natively, Excel (and PowerBI) can query an HTTP target to obtain data in a variety of formats.

Since XML is supported with no problems, this enables us to call the PI/PO PerformanceDataQueryServlet directly from Excel.

For those feeling adventurous, the HTTP logs can actually be picked up by Azure Log Analytics. You may need to adjust the date/time format with a script, but then it will be possible to have them stored in your workspace for pulling into PowerBI.

Alternatively, you will need to place the HTTP logs into a storage location at a regular interval, somewhere accessible from Excel/PowerBI. This could be SharePoint or an Azure Storage Account.

Another option is to have them placed into a location that serves HTTP, such as the SAP instance agent, as I have shown before. For the Web Dispatcher you may have 2 logs (in an active/active setup); for the ICM you will have a log file for each application server instance.

By naming the log files in an intelligent manner, you can ensure that your Power Query can always find the files (e.g. don’t include the date/time in the file name).

Data Acquisition

With your data accessible via HTTP, you can use Excel or PowerBI to process it.

In this example, we will go through the processing for the PerformanceDataQueryServlet, since that is the hardest to process in its raw XML format, with multiple nested tables. The nested tables are the reason we use Power Query to transform it.

Open Excel and create a new workbook, then select the “Data” tab:

Click “Get Data” and select “From Other Sources”, then click “Blank Query”:

Click “Advanced Editor”:

Remove any existing text from the query box:

At this point, we can paste in the code necessary to obtain our data, but first we need to understand the URL composition correctly.

For this we can refer to the 2014 blog post from Vadim Klimov to understand the required parameters.

Here’s my sample HTTP call:

https://sapts1app1:50001/mdt/performancedataqueryservlet?component=af.ts1.sapts1db01&begin=2021-01-10T00:00:00.000Z&end=2021-01-11T00:00:00.000Z

This can be broken down as follows:

| Entry | Value |
|---|---|
| https://sapts1app1:50001 | Host address of the PO application server (this can be any one of the Java instances). |
| /mdt/performancedataqueryservlet | URI for the PerformanceDataQueryServlet. |
| component=af.ts1.sapts1db01 | The name of our AAEX. |
| begin=2021-01-10T00:00:00.000Z | The begin time of our data selection period. |
| end=2021-01-11T00:00:00.000Z | The end time of our data selection period. |

Something you will notice about our URL is that we are using the HOURLY data selection period, listing data for a 24-hour period aggregated by hour.

We don’t really have much choice with the PerformanceDataQueryServlet, as we can only choose from MINUTE, HOURLY or DAILY, with aggregation levels of 15 minutes, 1 hour or 1 day respectively.
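If you end up scripting the call rather than typing the URL by hand, the composition above can be sketched in Python as follows. This is only an illustration using the host, component and dates from my sample call; the function names are my own and the timestamps are assumed to be timezone-aware UTC values.

```python
from datetime import datetime, timezone
from urllib.parse import urlencode


def servlet_timestamp(moment):
    """Format a timezone-aware datetime like the sample URL (UTC, milliseconds)."""
    utc = moment.astimezone(timezone.utc)
    return utc.strftime("%Y-%m-%dT%H:%M:%S") + f".{utc.microsecond // 1000:03d}Z"


def build_servlet_url(base_url, component, begin, end):
    """Assemble the PerformanceDataQueryServlet URL from its parts."""
    query = urlencode(
        {
            "component": component,
            "begin": servlet_timestamp(begin),
            "end": servlet_timestamp(end),
        },
        safe=":",  # keep the colons in the timestamps readable
    )
    return f"{base_url}/mdt/performancedataqueryservlet?{query}"


url = build_servlet_url(
    "https://sapts1app1:50001",
    "af.ts1.sapts1db01",
    datetime(2021, 1, 10, tzinfo=timezone.utc),
    datetime(2021, 1, 11, tzinfo=timezone.utc),
)
print(url)
```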

If we decide not to pull the data directly via HTTP, we can save the servlet response to a flat file beforehand and load that file instead.

The saved file has exactly the same XML format as the data returned from the HTTP call.

Here’s my sample data returned from the call to the PerformanceDataQueryServlet:

```xml
<?xml version="1.0" encoding="UTF-8" ?>
<PerformanceDataQueryResults xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:noNamespaceSchemaLocation="http://sapts1app1:50001/mdt/monitor/PerformanceDataQuery.xsd">
  <Result>
    <Code>OK</Code>
    <Details>OK</Details>
    <Text>Successfully read performance data.</Text>
    <Component>af.ts1.sapts1db01</Component>
    <PeriodType>DAILY</PeriodType>
    <BeginTime timezone="UTC">2021-01-10 00:00:00.0</BeginTime>
    <EndTime timezone="UTC">2021-01-11 00:00:00.0</EndTime>
  </Result>
  <Data>
    <ColumnNames>
      <Column>INBOUND_CHANNEL</Column>
      <Column>OUTBOUND_CHANNEL</Column>
      <Column>DIRECTION</Column>
      <Column>DELIVERY_SEMANTICS</Column>
      <Column>SERVER_NODE</Column>
      <Column>FROM_PARTY_NAME</Column>
      <Column>FROM_SERVICE_NAME</Column>
      <Column>TO_PARTY_NAME</Column>
      <Column>TO_SERVICE_NAME</Column>
      <Column>ACTION_NAME</Column>
      <Column>ACTION_TYPE</Column>
      <Column>SCENARIO_IDENTIFIER</Column>
      <Column>MESSAGE_COUNTER</Column>
      <Column>MAX_MESSAGE_SIZE</Column>
      <Column>MIN_MESSAGE_SIZE</Column>
      <Column>AVG_MESSAGE_SIZE</Column>
      <Column>MAX_RETRY_COUNTER</Column>
      <Column>MIN_RETRY_COUNTER</Column>
      <Column>AVG_RETRY_COUNTER</Column>
      <Column>AVG_PROCESSING_TIME</Column>
      <Column>TOTAL_PROCESSING_TIME</Column>
      <Column>MEASURING_POINTS</Column>
    </ColumnNames>
    <DataRows>
      <Row>
        <Entry>HTTPSender_TS1</Entry>
        <Entry>SOAPReceiver_Proxy</Entry>
        <Entry>OUTBOUND</Entry>
        <Entry>BE</Entry>
        <Entry>1234567</Entry>
        <Entry></Entry>
        <Entry>BSR_SAP</Entry>
        <Entry></Entry>
        <Entry>TS_SERVER</Entry>
        <Entry>ProcessingIn</Entry>
        <Entry>urn:in2db.com:ERP:Test</Entry>
        <Entry>|BSR_SAP|ProcessingOut||</Entry>
        <Entry>3</Entry>
        <Entry>2396</Entry>
        <Entry>1391</Entry>
        <Entry>1817</Entry>
        <Entry>0</Entry>
        <Entry>0</Entry>
        <Entry>0.00</Entry>
        <Entry>524476</Entry>
        <Entry>1573428</Entry>
        <Entry> <MeasuringPoints>
          <MP> <Name>MS:module_in:CallSapAdapter</Name> <Sequence>1</Sequence> <Max>394</Max> <Avg>349</Avg> <Min>261</Min></MP>
          <MP> <Name>MS:stage:SI</Name> <Sequence>2</Sequence> <Max>12</Max> <Avg>9</Avg> <Min>8</Min></MP>
          <MP> <Name>MS:stage:BI</Name> <Sequence>3</Sequence> <Max>73</Max> <Avg>60</Avg> <Min>52</Min></MP>
          <MP> <Name>MS:stage:VI</Name> <Sequence>4</Sequence> <Max>12</Max> <Avg>8</Avg> <Min>7</Min></MP>
          <MP> <Name>MS:stage:MS</Name> <Sequence>5</Sequence> <Max>1266</Max> <Avg>1050</Avg> <Min>771</Min></MP>
          <MP> <Name>MS:Message_Put_In_Store</Name> <Sequence>6</Sequence> <Max>155</Max> <Avg>112</Avg> <Min>90</Min></MP>
          <MP> <Name>MS:Message_Put_In_Disp_Queue</Name> <Sequence>7</Sequence> <Max>2328</Max> <Avg>836</Avg> <Min>82</Min></MP>
          <MP> <Name>MS:Message_Wait_In_Disp_Queue</Name> <Sequence>8</Sequence> <Max>1445</Max> <Avg>630</Avg> <Min>203</Min></MP>
          <MP> <Name>MS:Message_Put_In_Queue</Name> <Sequence>9</Sequence> <Max>44</Max> <Avg>42</Avg> <Min>42</Min></MP>
          <MP> <Name>MS:Message_Wait_In_Queue</Name> <Sequence>10</Sequence> <Max>323</Max> <Avg>263</Avg> <Min>195</Min></MP>
          <MP> <Name>MS:Message_Update_Status</Name> <Sequence>11</Sequence> <Max>233</Max> <Avg>166</Avg> <Min>128</Min></MP>
          <MP> <Name>MS:stage:AM</Name> <Sequence>12</Sequence> <Max>114891</Max> <Avg>41811</Avg> <Min>2755</Min></MP>
          <MP> <Name>MS:stage:SO</Name> <Sequence>13</Sequence> <Max>59</Max> <Avg>40</Avg> <Min>24</Min></MP>
          <MP> <Name>MS:stage:VO</Name> <Sequence>14</Sequence> <Max>44</Max> <Avg>33</Avg> <Min>25</Min></MP>
          <MP> <Name>MS:stage:AT</Name> <Sequence>15</Sequence> <Max>468</Max> <Avg>364</Avg> <Min>304</Min></MP>
          <MP> <Name>MS:module_out:sap.com/com.sap.aii.af.soapadapter/XISOAPAdapterBean</Name> <Sequence>16</Sequence> <Max>1008279</Max> <Avg>478000</Avg> <Min>131434</Min></MP>
          <MP> <Name>MS:Resp:stage:BI</Name> <Sequence>17</Sequence> <Max>575</Max> <Avg>481</Avg> <Min>395</Min></MP>
          <MP> <Name>MS:Resp:Message_Put_In_Store</Name> <Sequence>18</Sequence> <Max>157</Max> <Avg>136</Avg> <Min>121</Min></MP>
          <MP> <Name>MS:Resp:Message_Update_Status</Name> <Sequence>19</Sequence> <Max>89</Max> <Avg>86</Avg> <Min>81</Min></MP>
        </MeasuringPoints></Entry>
      </Row>
      <Row>
        <Entry>SOAP_Sender</Entry>
        <Entry>SOAPReceiver_Proxy</Entry>
        <Entry>OUTBOUND</Entry>
        <Entry>EO</Entry>
        <Entry>1234567</Entry>
        <Entry></Entry>
        <Entry>BSR_SAP</Entry>
        <Entry></Entry>
        <Entry>TS_SERVER</Entry>
        <Entry>Confirmation_In</Entry>
        <Entry>http://sap.com/xi/IS-U/Global2</Entry>
        <Entry>|BSR_SAP|Confirmation_Out||</Entry>
        <Entry>48</Entry>
        <Entry>672</Entry>
        <Entry>672</Entry>
        <Entry>672</Entry>
        <Entry>0</Entry>
        <Entry>0</Entry>
        <Entry>0.00</Entry>
        <Entry>89338</Entry>
        <Entry>4288227</Entry>
        <Entry> <MeasuringPoints>
          <MP> <Name>MS:SOAPHandler.processSOAPtoXMB</Name> <Sequence>1</Sequence> <Max>488</Max> <Avg>296</Avg> <Min>190</Min></MP>
          <MP> <Name>MS:module_in:CallSapAdapter</Name> <Sequence>2</Sequence> <Max>521</Max> <Avg>211</Avg> <Min>144</Min></MP>
          <MP> <Name>MS:stage:SI</Name> <Sequence>3</Sequence> <Max>55</Max> <Avg>6</Avg> <Min>5</Min></MP>
          <MP> <Name>MS:stage:BI</Name> <Sequence>4</Sequence> <Max>195</Max> <Avg>37</Avg> <Min>26</Min></MP>
          <MP> <Name>MS:stage:VI</Name> <Sequence>5</Sequence> <Max>28</Max> <Avg>5</Avg> <Min>4</Min></MP>
          <MP> <Name>MS:stage:MS</Name> <Sequence>6</Sequence> <Max>7495</Max> <Avg>2675</Avg> <Min>1340</Min></MP>
          <MP> <Name>MS:Message_Put_In_Store</Name> <Sequence>7</Sequence> <Max>28648</Max> <Avg>8891</Avg> <Min>6457</Min></MP>
          <MP> <Name>MS:Message_Put_In_Disp_Queue</Name> <Sequence>8</Sequence> <Max>12290</Max> <Avg>6102</Avg> <Min>3558</Min></MP>
          <MP> <Name>MS:Message_Put_In_Queue</Name> <Sequence>9</Sequence> <Max>191</Max> <Avg>46</Avg> <Min>21</Min></MP>
          <MP> <Name>MS:Message_Wait_In_Queue</Name> <Sequence>10</Sequence> <Max>401</Max> <Avg>229</Avg> <Min>153</Min></MP>
          <MP> <Name>MS:Message_Wait_In_Disp_Queue</Name> <Sequence>11</Sequence> <Max>18855</Max> <Avg>5289</Avg> <Min>8</Min></MP>
          <MP> <Name>MS:Message_Update_Status</Name> <Sequence>12</Sequence> <Max>25237</Max> <Avg>9398</Avg> <Min>5056</Min></MP>
          <MP> <Name>MS:stage:AM</Name> <Sequence>13</Sequence> <Max>390</Max> <Avg>183</Avg> <Min>124</Min></MP>
          <MP> <Name>MS:stage:SO</Name> <Sequence>14</Sequence> <Max>102</Max> <Avg>17</Avg> <Min>16</Min></MP>
          <MP> <Name>MS:stage:VO</Name> <Sequence>15</Sequence> <Max>155</Max> <Avg>22</Avg> <Min>17</Min></MP>
          <MP> <Name>MS:stage:AT</Name> <Sequence>16</Sequence> <Max>1813</Max> <Avg>332</Avg> <Min>205</Min></MP>
          <MP> <Name>MS:module_out:sap.com/com.sap.aii.af.soapadapter/XISOAPAdapterBean</Name> <Sequence>17</Sequence> <Max>91602</Max> <Avg>55588</Avg> <Min>46038</Min></MP>
        </MeasuringPoints></Entry>
      </Row>
    </DataRows>
  </Data>
</PerformanceDataQueryResults>
```

The XML data is complex and contains nested tables for the “MeasuringPoints” elements. This is not something that is possible to extract using the Excel data import GUI alone. You will need to use my code 😉

In the code there are two points that do the required pre-processing: they transpose the data, fill it up (Table.FillUp) and then remove the data parts that are no longer needed, returning it in the required format so that you can report on it with all the “MeasuringPoints” if you need them.

Could the above be done in another tool? Probably. But everyone has Excel.

Let’s put my Power Query code into the Excel query editor:

```powerquery
let
    // Uncomment to use a URL source:
    // Source = Xml.Tables(Web.Contents("https://sapts1app1:50001/mdt/performancedataqueryservlet?component=af.ts1.sapts1db01&begin=2021-01-10T00:00:00.000Z&end=2021-01-11T00:00:00.000Z")),
    Source = Xml.Tables(File.Contents("C:\Users\darryl\Documents\Projects\po-perf-metrics\performancedataqueryservlet-1.xml")),
    // Drill down: second top-level table (Data), then its second table (DataRows), then the Row table.
    Data = Source{1}[Table],
    DataRows = Data{1}[Table],
    Row = DataRows{0}[Table],
    // Pre-processing: transpose each row's Entry table, fill Column22 upwards, then remove the last row.
    #"Expanded Entry" = Table.TransformColumns(Row, {"Entry", each Table.RemoveLastN(Table.FillUp(Table.Transpose(_), {"Column22"}),1)}),
    #"Expanded Entry1" = Table.ExpandTableColumn(#"Expanded Entry", "Entry", {"Column1", "Column2", "Column3", "Column4", "Column5", "Column6", "Column7", "Column8", "Column9", "Column10", "Column11", "Column12", "Column13", "Column14", "Column15", "Column16", "Column17", "Column18", "Column19", "Column20", "Column21", "Column22"}, {"Entry.Column1", "Entry.Column2", "Entry.Column3", "Entry.Column4", "Entry.Column5", "Entry.Column6", "Entry.Column7", "Entry.Column8", "Entry.Column9", "Entry.Column10", "Entry.Column11", "Entry.Column12", "Entry.Column13", "Entry.Column14", "Entry.Column15", "Entry.Column16", "Entry.Column17", "Entry.Column18", "Entry.Column19", "Entry.Column20", "Entry.Column21", "Entry.Column22"}),
    // Rename the first 21 columns to the names listed under ColumnNames in the XML.
    #"Renamed Columns" = Table.RenameColumns(#"Expanded Entry1",{{"Entry.Column1", "INBOUND_CHANNEL"}, {"Entry.Column2", "OUTBOUND_CHANNEL"}, {"Entry.Column3", "DIRECTION"}, {"Entry.Column4", "DELIVERY_SEMANTICS"}, {"Entry.Column5", "SERVER_NODE"}, {"Entry.Column6", "FROM_PARTY_NAME"}, {"Entry.Column7", "FROM_SERVICE_NAME"}, {"Entry.Column8", "TO_PARTY_NAME"}, {"Entry.Column9", "TO_SERVICE_NAME"}, {"Entry.Column10", "ACTION_NAME"}, {"Entry.Column11", "ACTION_TYPE"}, {"Entry.Column12", "SCENARIO_IDENTIFIER"}, {"Entry.Column13", "MESSAGE_COUNTER"}, {"Entry.Column14", "MAX_MESSAGE_SIZE"}, {"Entry.Column15", "MIN_MESSAGE_SIZE"}, {"Entry.Column16", "AVG_MESSAGE_SIZE"}, {"Entry.Column17", "MAX_RETRY_COUNTER"}, {"Entry.Column18", "MIN_RETRY_COUNTER"}, {"Entry.Column19", "AVG_RETRY_COUNTER"}, {"Entry.Column20", "AVG_PROCESSING_TIME"}, {"Entry.Column21", "TOTAL_PROCESSING_TIME"}}),
    // Expose the MP table nested in Column22 as an expandable column.
    #"Expanded MP" = Table.ExpandTableColumn(#"Renamed Columns", "Entry.Column22", {"MP"}, {"Entry.Column22.MP"})
in
    #"Expanded MP"
```

In the code above, you will notice that “Source =” is using a local file. You can uncomment the “Web” source and comment out the “File” source if you are pulling the data directly via HTTP.

With the Power Query code entered into the editor, check there are no syntax errors and click “Done”:

When querying the data directly over HTTP you will need to edit the credentials at this point.

In the credentials screen, enter the “Basic” username and password to use.

The data will be displayed.

In my sample I see two rows of data:

At the initial top-level, you will see we have the Average Processing Time (in milliseconds) for each interface:

We also have an additional column at the end which contains embedded tables of additional metric data for each specific stage of processing within PI/PO:

By clicking the double arrows in the top of the header for the “Entry.Column22.MP” we can expand the embedded table (should you wish to), and you will see that it presents the following additional columns of data:

When you click “OK” it adds those columns to the main list, but it will create additional rows of data for each of those additional columns that have been expanded:
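If you want to check the row multiplication outside Excel, here is a rough Python sketch of the same idea. It is not my Power Query code, just an illustration: it flattens a trimmed copy of the sample response (three of the 22 columns and two of the measuring points) into one record per measuring point, repeating the interface columns on every record. It assumes the measuring points always sit in the last column, as they do in my sample.

```python
import xml.etree.ElementTree as ET

# Trimmed copy of the sample response: three of the 22 columns, two of the MPs.
SAMPLE = """<PerformanceDataQueryResults>
  <Data>
    <ColumnNames>
      <Column>INBOUND_CHANNEL</Column>
      <Column>AVG_PROCESSING_TIME</Column>
      <Column>MEASURING_POINTS</Column>
    </ColumnNames>
    <DataRows>
      <Row>
        <Entry>HTTPSender_TS1</Entry>
        <Entry>524476</Entry>
        <Entry><MeasuringPoints>
          <MP><Name>MS:stage:SI</Name><Sequence>2</Sequence><Max>12</Max><Avg>9</Avg><Min>8</Min></MP>
          <MP><Name>MS:stage:BI</Name><Sequence>3</Sequence><Max>73</Max><Avg>60</Avg><Min>52</Min></MP>
        </MeasuringPoints></Entry>
      </Row>
    </DataRows>
  </Data>
</PerformanceDataQueryResults>"""


def flatten(xml_text):
    """Return one dict per measuring point, repeating the interface columns."""
    root = ET.fromstring(xml_text)
    names = [column.text for column in root.findall("./Data/ColumnNames/Column")]
    records = []
    for row in root.findall("./Data/DataRows/Row"):
        entries = row.findall("Entry")
        # Every column except the last one holds a plain text value.
        base = {
            name: (entry.text or "").strip()
            for name, entry in zip(names[:-1], entries[:-1])
        }
        # The last entry holds the nested MeasuringPoints table.
        for mp in entries[-1].iter("MP"):
            point = {child.tag: child.text for child in mp}
            records.append({**base, **point})
    return records


for record in flatten(SAMPLE):
    print(record["INBOUND_CHANNEL"], record["Name"], record["Avg"])
```

One interface row with two measuring points becomes two records, which is the same effect you see in the expanded Excel table.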

With the above data expanded, we can really produce some nice graphs.

Here’s an example showing the breakdown of average response time for each of those processing stages.

First I put them into a pivot table and apply an average to the “Avg” column for each of the “Name” column values:

Then I create a pie chart for the data and we can report on which processing stage inside PI/PO is consuming the most time:
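For anyone who wants to sanity-check the pivot numbers, the calculation can be sketched in a few lines of Python. The values below are a handful of “Avg” figures copied from my two sample rows; the function name is just for illustration.

```python
from collections import defaultdict

# (interface, stage name, Avg) values copied from the two sample rows.
rows = [
    ("HTTPSender_TS1", "MS:stage:SI", 9),
    ("HTTPSender_TS1", "MS:stage:BI", 60),
    ("HTTPSender_TS1", "MS:stage:MS", 1050),
    ("SOAP_Sender", "MS:stage:SI", 6),
    ("SOAP_Sender", "MS:stage:BI", 37),
    ("SOAP_Sender", "MS:stage:MS", 2675),
]


def average_by_stage(records):
    """Average the Avg value per stage name, like the pivot table does."""
    totals = defaultdict(lambda: [0, 0])  # name -> [sum, count]
    for _interface, name, avg in records:
        totals[name][0] += avg
        totals[name][1] += 1
    return {name: total / count for name, (total, count) in totals.items()}


averages = average_by_stage(rows)
grand_total = sum(averages.values())
# Largest share first, as you would read it off the pie chart.
for name, value in sorted(averages.items(), key=lambda item: -item[1]):
    print(f"{name}: {value:.1f} ({value / grand_total:.0%} of the total)")
```

Note that this is an unweighted average of averages, the same as the pivot. Weighting each value by MESSAGE_COUNTER would be another option if your interfaces have very different message volumes.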

By applying additional graphs and filters we could report on individual interfaces’ overall response times, then allow drill-down into the specifics.

Any Potential Issues?

There is one big caveat with the above process of pulling the data from the servlet.

The servlet is extracting data from a memory cache area inside PI/PO.

This cache is an LRU cache, meaning it has a limited size and gets overwritten when it becomes full.

The quantity of data is therefore limited.
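To illustrate why older data disappears, here is a toy LRU cache in Python. It is purely a sketch of the general technique and says nothing about how SAP implements the cache internally; the capacity and the keys are made up.

```python
from collections import OrderedDict


class LRUCache:
    """Toy least-recently-used cache; not SAP's implementation."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = OrderedDict()

    def put(self, key, value):
        self.items[key] = value
        self.items.move_to_end(key)          # mark as most recently used
        if len(self.items) > self.capacity:
            self.items.popitem(last=False)   # evict the least recently used

    def get(self, key):
        if key not in self.items:
            return None
        self.items.move_to_end(key)
        return self.items[key]


cache = LRUCache(capacity=2)
cache.put("00:00-00:15", "metrics A")
cache.put("00:15-00:30", "metrics B")
cache.put("00:30-00:45", "metrics C")   # cache is full, so the oldest entry is dropped
print(cache.get("00:00-00:15"))         # None, the first interval is gone
```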

It is possible to switch on the database persistence (logging) of successful messages in PI/PO, but this has a detrimental impact on message throughput performance and is not recommended by SAP for production systems.

To try and get around the cache limitations, my advice would be to extract the data using the finest granularity that the servlet allows (MINUTE), and save it to a disk file that is accessible from Excel.

Another method could be to use Microsoft Power Automate (previously MS Flow) to pull the file into SharePoint or other storage.

By extracting the data frequently, you are trying to ensure that you have it stored before the cache is cleared, but you are also building a time-series which you could potentially include in your reporting tool.

A time-series would allow you to scroll through data in windows of at least 15 mins in size. Not bad.
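A scheduled extraction script would need a list of windows to request. As a rough Python sketch, the code below generates consecutive 15-minute begin/end pairs in the same timestamp layout as my sample URL, together with a file name per window. The file naming is hypothetical, and I am assuming the servlet accepts begin/end values aligned to those 15-minute intervals.

```python
from datetime import datetime, timedelta, timezone


def quarter_hour_windows(start, end):
    """Yield consecutive 15-minute (begin, end) pairs between start and end."""
    step = timedelta(minutes=15)
    begin = start
    while begin + step <= end:
        yield begin, begin + step
        begin += step


def stamp(moment):
    """Same timestamp layout as the begin/end values in the sample URL."""
    return moment.strftime("%Y-%m-%dT%H:%M:%S.000Z")


start = datetime(2021, 1, 10, 0, 0, tzinfo=timezone.utc)
windows = list(quarter_hour_windows(start, start + timedelta(hours=1)))
for begin, end in windows:
    # Hypothetical file naming: one file per window builds up the time-series.
    print(f"perf_{begin:%Y%m%dT%H%M}.xml", stamp(begin), stamp(end))
```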

Summary

We identified the important areas of data collection in PI/PO (and Netweaver in general) to allow response times to be seen.

We also noted that HTTP response codes such as 503 should be obtained from the first outermost point of the Netweaver stack (if possible).

We saw an example of using the “PerformanceDataQueryServlet” to pull data from the PI/PO memory cache and transformed it using Power Query to allow detailed reporting on the response times.

I created a demonstration graph from a pivot table using my sample data, which showed a possible drill-down in the response time of individual processing stages within SAP PI/PO.

Hopefully I have given you some ideas for how you can solve your PI/PO performance reporting requirement.
