Running SAP NetWeaver A(SCS)/ERS in a Pacemaker cluster in Azure, AWS or GCP, or potentially even on-premises?

Be aware: there is a configuration issue in the current version of the Microsoft, AWS, GCP and SUSE documentation for the Pacemaker cluster configuration of the SAP Enqueue Replication Server (ERS) instance primitive in an ENSA1 (classic Enqueue) architecture (on SLES without the native SystemD Startup Framework).

Having double-checked both the SAP and SUSE documentation (I don't have access to check RHEL), I believe that the SAP-certified SUSE cluster design is correct, but that the instructions to configure it are not in line with the SAP recommendations.

In this post I explain the issue, what the impact is and how to potentially correct it.

NOTE: This is for SLES systems that are not using the new “Native Startup Framework for SystemD” Services for SAP, see here.

Don’t put your SAP system at risk: get a big coffee and let me explain.

SAP ASCS and High Availability

The Highly Available (HA) cluster configuration for the SAP ABAP Central Services (ASCS) instance is critical to the successful operation of the SAP system. The SAP Enqueue (EN) process is the guardian of the SAP application-level logical locks (locks on business objects), and the SAP Enqueue Replication Server (ERS) instance is the guarantor for the EN when a cluster failover occurs.

In a two-node HA SAP ASCS/ERS setup, the EN process is on the first server node and the ERS instance is on the second node.

The EN process (within the ASCS instance) replicates to the ERS instance (or rather, the ERS pulls from the EN process in a loop).

If the EN process goes down, the surrounding cluster is usually configured to fail over the ASCS instance to the secondary cluster node where the ERS instance is running. The EN process will then take ownership of the replica in-memory lock table:

What is an ideal Architecture design?

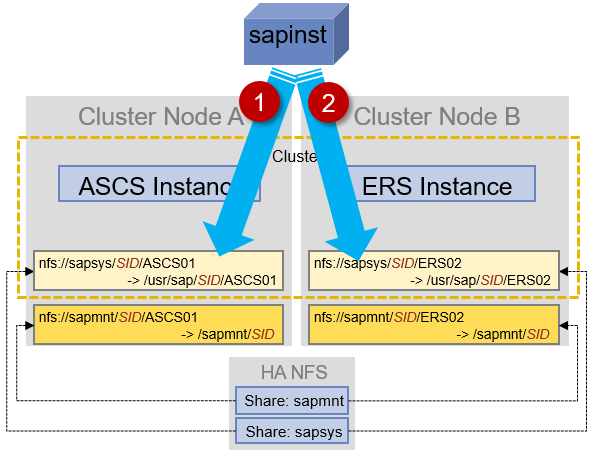

In an ideal design, according to the SUSE documentation here (again, I’m sure RHEL is similar, but if someone can verify that would be great), the ASCS and ERS instances are installed on cluster-controlled disk storage that is available to both nodes in the cluster:

We mount the /sapmnt (and potentially /usr/sap/SID/SYS) file system from NFS storage, but these file systems are *not* cluster controlled file systems.

The above design ensures that the ASCS and ERS instances have access to their “local” file system areas before they are started by the cluster. In the event of a failover from node A to node B, the cluster ensures the relevant file system area is present before starting the SAP instance.

We can confirm this by looking at the official SAP file system layout for an HA system here:

What is Microsoft’s Design in Azure?

Let’s look at the cluster design on Microsoft’s documentation here:

It clearly shows that /usr/sap/SID/ASCS and /usr/sap/SID/ERS are stored on the HA NFS storage.

So this matches the SAP design.

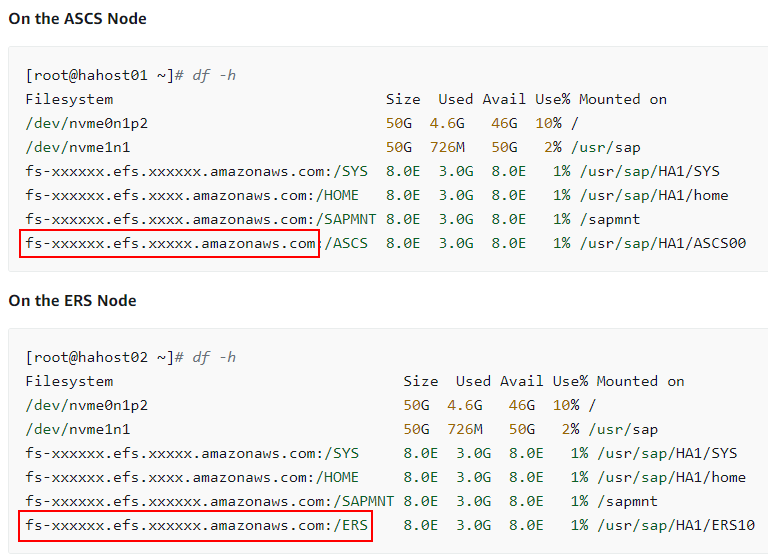

What is Amazon’s design in AWS?

If we look at the documentation provided by Amazon here:

We can see that they are using Amazon EFS (Elastic File System) for the ASCS and ERS instance locations, which is mounted using the NFS protocol.

So the design is the same as Microsoft’s and again this matches the SAP design.

What is Google’s design in GCP?

If we look at the documentation provided by Google, the diagram doesn’t show clearly how the filesystems are provided for the ASCS and ERS, so we have to look further into the configuration step here:

The above shows the preparation of the NFS storage.

Later in the process we see in the cluster configuration that the ASCS and ERS file systems are cluster controlled:

and

The above mounts /usr/sap/SID/ASCS## or /usr/sap/SID/ERS## as cluster-controlled file systems.

Therefore the GCP design also matches the SAP, Azure and AWS designs.

Where is the Configuration Issue?

So far we have:

- Understood that /sapmnt is not a cluster-controlled file system.

- Established that Azure, AWS, GCP and the SUSE documentation are in alignment regarding the cluster-controlled file systems for the ASCS and the ERS.

Now we need to pay closer attention to the cluster configuration of the SAP instances themselves.

The Pacemaker cluster configuration of a SAP instance involves three (or more) different cluster resources: networking, storage and the SAP instance, with the “SAP Instance” resource being the actual running SAP software process(es).

Within the cluster, the “SAP Instance” Resource Agent (RA) is actually called “SAPInstance”, and in the cluster configuration it takes a number of parameters specific to the SAP instance that it is controlling.

One of these parameters is called “START_PROFILE” which should point to the SAP instance profile.
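To see which profile path a SAPInstance primitive is actually configured with, you can pull START_PROFILE out of the `crm configure show` output. The function below is a minimal sketch, assuming crmsh-style output where each primitive is split over backslash-continued lines; the function name is hypothetical and not part of crmsh.

```shell
# Hypothetical helper (not part of crmsh): print "<resource> <START_PROFILE>"
# for every SAPInstance primitive found in `crm configure show` output on stdin.
crm_start_profiles() {
  # Join backslash-continued lines so each primitive sits on a single line.
  sed -e ':a' -e '/\\$/N; s/\\\n//; ta' |
    awk '
      # Field 1 is "primitive", field 2 the resource id, field 3 the RA type
      # ("SAPInstance" or "ocf:heartbeat:SAPInstance").
      $1 == "primitive" && $3 ~ /SAPInstance$/ {
        sp = "-"   # printed when no START_PROFILE parameter is set
        for (i = 4; i <= NF; i++) {
          if ($i ~ /^START_PROFILE=/) {
            sp = substr($i, 15)   # drop the 14-character START_PROFILE= prefix
            gsub(/"/, "", sp)     # strip optional double quotes
          }
        }
        print $2, sp
      }'
}
```

Usage on a cluster node: `crm configure show | crm_start_profiles`. Each SAPInstance primitive is printed with the profile path the cluster will pass when it starts that instance.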

The SAP instance profile is a plain-text file that contains all the commands and settings required to start (and stop) the SAP instance in the correct way, as well as the configuration the instance needs once it has started. It is needed by the SAP Instance Agent (sapstartsrv) and by the executables that make up the actual SAP instance (ASCS binaries: msg_server and enserver; ERS binary: enrepserver).

Without the profile file, the SAP Instance Agent cannot operate a SAP instance and the process that is the instance itself is unable to read any required parameter settings.

Usually, the SAP Instance Agent knows where to find the instance profile because at server startup, the sapinit script (triggered either by a systemd unit file or by the old SysV init scripts) executes the entries in the file /usr/sap/sapservices.

These entries call the SAP Instance Agent for each SAP instance and they pass the location of the start profile.

Here’s a diagram from a prior blog post which shows what I mean:

In our two-node cluster setup example, after a node is started we will see two SAP Instance Agents running, one for ASCS and one for ERS. This happens no matter what the cluster configuration is. The instance agents are always started, and always with the profile file specified in the /usr/sap/sapservices file.

NOTE: This changes with the latest cluster setup in SLES 15, where the SAP Instance Agent start process is purely SystemD controlled.

The /usr/sap/sapservices file is created at installation time. So it contains the entries that the SAP Software Provisioning Manager has created.

The ASCS instance entry in the sapservices file looks like this:

```shell
LD_LIBRARY_PATH=/usr/sap/SID/ASCS01/exe:$LD_LIBRARY_PATH; export LD_LIBRARY_PATH; /usr/sap/SID/ASCS01/exe/sapstartsrv pf=/usr/sap/SID/SYS/profile/SID_ASCS01_myhost -D -u sidadm
```

But the ERS instance entry looks slightly different:

```shell
LD_LIBRARY_PATH=/usr/sap/SID/ERS11/exe:$LD_LIBRARY_PATH; export LD_LIBRARY_PATH; /usr/sap/SID/ERS11/exe/sapstartsrv pf=/usr/sap/SID/ERS11/profile/SID_ERS11_myhost -D -u sidadm
```

If we compare the “pf=” (profile) parameter of the ASCS and ERS entries after installation, we notice that the ERS instance profile location is not under the link /usr/sap/SID/SYS.

Since we have already investigated the file system layout, we know that the “SYS” location only contains links, which point to other locations. In this case, the ASCS is looking for sub-directory “profile”, which is a link to directory /sapmnt/SID/profile.

The ERS, on the other hand, is using a local copy of the profile.

This would usually go unnoticed, but surely the ERS is using a local copy of the profile for a reason?
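To compare the “pf=” values on your own nodes, a small parser over /usr/sap/sapservices can list each instance with its profile path. This is a minimal sketch, assuming the classic (non-systemd) sapservices line format shown above; the function name is hypothetical, and it simply labels a path as “shared” when it is under /sapmnt/ or reached through .../SYS/profile/, and “local” otherwise.

```shell
# Hypothetical helper: for each classic sapstartsrv entry in a sapservices
# file, print "<instance> <pf= path> <shared|local>".
# "shared" = profile under /sapmnt/ or reached through .../SYS/profile/.
sapservices_profiles() {
  local file="${1:-/usr/sap/sapservices}"
  awk '
    /^[ \t]*#/ { next }    # skip comment lines
    /sapstartsrv/ {
      inst = ""; pf = ""
      for (i = 1; i <= NF; i++) {
        if ($i ~ /\/exe\/sapstartsrv$/) {
          # /usr/sap/SID/ERS11/exe/sapstartsrv -> ERS11
          n = split($i, parts, "/")
          inst = parts[n - 2]
        }
        if ($i ~ /^pf=/) pf = substr($i, 4)
      }
      if (inst == "" || pf == "") next
      where = (pf ~ /^\/sapmnt\// || pf ~ /\/SYS\/profile\//) ? "shared" : "local"
      print inst, pf, where
    }' "$file"
}
```

With the two entries above, the ASCS line is reported as “shared” and the ERS line as “local”, which is the layout the rest of this post argues should be preserved.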

Looking at SAP notes, we find SAP note 2954193, which explains that an ERS instance in an ENSA1 architecture should be started using a local instance profile file:

Important part: “this configuration must not be changed“.

Very interesting. It doesn’t go into any further detail, but from what we have understood about the criticality of the SAP ASCS and ERS instances, we have to assume that SAP have tested a specific failure scenario (maybe failure of sapmnt) and deemed it necessary to ensure that the ERS instance profile is always available.

I can’t think of any other reason (maybe you can?).

The next question: how does the ERS profile get into that local “profile” location? It’s not something the other instances do.

After some testing it would appear that the “.lst” file in the sapmnt location is used by the SAP Instance Agent to determine which files to copy at instance startup:

It is important to notice that the DEFAULT.PFL is also copied by the above process.

Make sure you don’t go removing that “.lst” file from “/sapmnt/SID/profile”, otherwise those local profiles will be outdated!

To summarise in a nice diagram, this setup is BAD:

This is GOOD:

What about sapservices?

When we discussed the start process of the server, we mentioned that the SAP Instance Agent is always started from the /usr/sap/sapservices file settings. We also noted that in the /usr/sap/sapservices file, the settings for the ERS profile file location are correct.

So why would the cluster affect the profile location of the ERS at all?

It’s a good question, and the answer is not simple, because it requires a specific circumstance to happen in the lifecycle of the cluster.

Here’s the specific circumstance:

- The cluster starts. The ERS Instance Agent is already running, so it has the correct profile.

- We can run “ps -ef | grep ERS” and would see that the “er” process has the correct “pf=/path-to-profile”, pointing to the local copy of the profile.

- If the ERS instance is now somehow terminated (example: “rm /tmp/.sapstream50023”), the cluster will restart the whole SAP Instance Agent of the ERS (without a cluster failover).

- At this point, the cluster starts the ERS Instance Agent with the wrong profile location, and the “er” binary inherits it when it starts. This remains in place until the next complete shutdown of the ERS Instance Agent.
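Because this state is invisible from the outside, it is worth checking which profile the running ERS processes actually use, on both nodes. A minimal sketch, assuming the standard `ps -ef` column layout (command in field 8); the function name is hypothetical, and the same “shared”/“local” labelling rule as above is used (under /sapmnt/ or .../SYS/profile/ counts as shared).

```shell
# Hypothetical helper: read ps -ef output on stdin and print
# "<program> <pf= path> <shared|local>" for ERS-related processes.
ers_process_profiles() {
  awk '
    /ERS/ && / pf=/ {
      # In ps -ef output the command starts at field 8.
      n = split($8, parts, "/")
      pf = ""
      for (i = 9; i <= NF; i++)
        if ($i ~ /^pf=/) { pf = substr($i, 4); break }
      if (pf == "") next
      where = (pf ~ /^\/sapmnt\// || pf ~ /\/SYS\/profile\//) ? "shared" : "local"
      print parts[n], pf, where
    }'
}
```

Usage: `ps -ef | ers_process_profiles`. A “shared” result for the ERS sapstartsrv or the “er” process suggests it was (re)started by the cluster with the shared profile path.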

As you can see, it’s not an easy situation to detect, because from an outside perspective, the ERS died and was successfully restarted.

Except it was restarted with the incorrect profile location.

If a subsequent failure happens to the sapmnt file system, this would put the ERS at risk (we don’t know the exact risk, because it is not mentioned in the SAP note referenced earlier).

What is more, the ERS instance is not monitorable using SAP Solution Manager (out-of-the-box); you would need to create your own monitoring element for it.

Which Documentation has this Issue?

Now that we know the ERS instance profile location has to be different, we need to go back and look at how the cluster configurations have been documented, because “this configuration must not be changed”!

Let’s look first at the SUSE documentation here:

Alright, the above would seem to show that “/sapmnt” is used for the ERS.

That’s not good as it doesn’t comply with the SAP note.

How about the Microsoft documentation for Azure:

No that’s also using /sapmnt for the ERS profile location. That’s not right either.

Looking at the AWS document now:

Still /sapmnt for the ERS.

Finally, let’s look at the GCP document:

This one is a little harder, but essentially the proposed variable “PATH_TO_PROFILE” looks like it is bound to the same value as the ASCS instance defined just above it, so I’m going to say it will be “/sapmnt”, because when you try to change it on one, it forces the same change on the other:

We can say that all the documentation available for the main hyperscalers provides an incorrect cluster configuration, which could cause the ERS to operate in a way that SAP strongly recommends against.
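If you do decide to change it, the value SAP note 2954193 points to is the ERS instance's local profile path. The sketch below only prints a Pacemaker `crm_resource` command for review rather than running it. The function name is hypothetical, and the resource name, SID, instance number and virtual host name are placeholders based on the examples in this post. Be aware that changing a resource parameter will normally make Pacemaker restart that resource, so treat it as a planned change.

```shell
# Hypothetical helper: print (do not run) a crm_resource command that points
# an ERS SAPInstance primitive at the local copy of its instance profile.
# Arguments: <resource> <SID> <ERS instance number> <virtual hostname>
ers_fix_command() {
  local rsc="$1" sid="$2" nr="$3" vhost="$4"
  if [ -z "$rsc" ] || [ -z "$sid" ] || [ -z "$nr" ] || [ -z "$vhost" ]; then
    echo "usage: ers_fix_command <resource> <SID> <nr> <vhost>" >&2
    return 1
  fi
  # Local profile path, e.g. /usr/sap/SID/ERS11/profile/SID_ERS11_myhost
  local profile="/usr/sap/${sid}/ERS${nr}/profile/${sid}_ERS${nr}_${vhost}"
  printf 'crm_resource --resource %s --set-parameter START_PROFILE --parameter-value %s\n' \
    "$rsc" "$profile"
}
```

For example, `ers_fix_command rsc_sap_SID_ERS11 SID 11 myhost` prints the command for the ERS11 example used above; check the generated path exists on both nodes before running it.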

Should we correct the issue, and how can we correct it?

I have reported my finding to both Microsoft and SUSE, so I would expect them to validate it.

However, in the past when such feedback has been provided, the relevant SAP note has been updated to exclude or invalidate the information altogether, rather than anyone undertaking the effort of fixing or adjusting the incorrect configuration documentation.

That’s just the way it is and it’s not my product, so I have no say in the solution, I can only report on what I know is correct at the time.

If you would like to correct the issue using the information known at this point in time, the high-level steps to validate that the ERS is configured and operating in the correct way are below:

- Check the cluster configuration for the ERS instance to ensure it is using the local copy of the instance profile.

- Check the current profile location used by the running ERS Instance Agent (on both nodes in the cluster).

- Double check someone has not adjusted the /usr/sap/sapservices file incorrectly (on both nodes in the cluster).

- Check that the instance profile “.lst” file exists in the /sapmnt/SID/profile directory, so that the ERS Instance Agent can copy the latest versions of the profile and the DEFAULT.PFL to the local directory location when it next starts.

- Check for any differences between the current local profile files and the files in the /sapmnt/SID/profile directory and consider executing the “sapcpe” process manually.
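The last two checks can be sketched as one comparison: read the file names from the “.lst” file, then compare each shared copy in /sapmnt/SID/profile with the local copy used by the ERS. This is a minimal sketch, assuming the “.lst” file holds one file name per line; the function name and status words are hypothetical, and it only reports. Copying remains the job of sapcpe.

```shell
# Hypothetical helper: compare the shared profiles listed in a .lst file with
# the local copies used by the ERS. Prints one status line per file:
#   OK | DIFFERS | MISSING_LOCAL | MISSING_SHARED
# Returns 0 if all match, 1 on any difference, 2 if the .lst file is missing.
compare_profiles() {
  local lst="$1" shared="$2" local_dir="$3" rc=0 name
  if [ ! -s "$lst" ]; then
    echo "MISSING_LST $lst"
    return 2
  fi
  # Assumption: one file name per line; blank lines and '#' lines are skipped.
  while IFS= read -r name || [ -n "$name" ]; do
    case "$name" in ''|'#'*) continue ;; esac
    if [ ! -f "$shared/$name" ]; then
      echo "MISSING_SHARED $name"; rc=1
    elif [ ! -f "$local_dir/$name" ]; then
      echo "MISSING_LOCAL $name"; rc=1
    elif ! cmp -s "$shared/$name" "$local_dir/$name"; then
      echo "DIFFERS $name"; rc=1
    else
      echo "OK $name"
    fi
  done < "$lst"
  return "$rc"
}
```

Usage, with the “.lst” file name left as a placeholder: `compare_profiles /sapmnt/SID/profile/<your .lst file> /sapmnt/SID/profile /usr/sap/SID/ERS11/profile`. Any DIFFERS or MISSING_LOCAL line is a candidate for running sapcpe manually.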

Thanks for reading.
