Scenario: You want to prototype something and you don’t have the hardware available for a new prototype HANA database. Instead, you can use the power of a virtual machine to get a HANA SPS07 database up and running in less than 30 minutes.

Well, it was supposed to be 30 minutes, and it certainly can be 30 minutes, provided you have the right equipment to hand.

As I found out, working on a system with a slow disk and limited CPU stretched this to 2 hours from start to finish.

Here’s how…

Update (09/2014): if you’re using SPS08 (rev 80+), this will also work, but people have had issues trying to perform the install with the media converted to an ISO. Instead, just use the VMware “Shared Folders” feature to share the install files from your PC into the SUSE VM.

What you’ll need:

– SAP HANA In Memory DB 1.0 SPS07 install media from SAP Software Download Centre. This is media ID 51047423.

– The SUSE Linux for SAP v11 SP2 or SP3 install media (ISO).

– A valid license for the HANA database (platform edition or enterprise edition).

– SAP HANA Studio rev 70 installed on a PC which can access the virtual HANA server you’re going to create (the Studio install media is contained within the HANA install media DVD, or you can download it separately).

– A physical machine to host the virtual machine. You need at least 20GB of RAM, although if you configure your pagefile (in Windows) on SSD or flash, you could get away with 16GB (I did!).

What we’re going to do:

– We’ll create a basic SUSE Linux for SAP virtual machine. You can use any host OS; I’m using Windows 7 64-bit.

– Because most people use VMs to make the most of their infrastructure, we’ll go through a couple of steps to really reduce the O/S memory footprint (disabling X11 is one of these steps). In the end, the whole thing runs in less than 16GB of RAM.

– We’ll install a basic HANA database.

– We disable the XS-Engine (saving a lot of memory), which you don’t have to do if you absolutely need it. The XS-Engine is a lightweight application server for hosting the next-generation HANA-based apps.

START THE CLOCK!

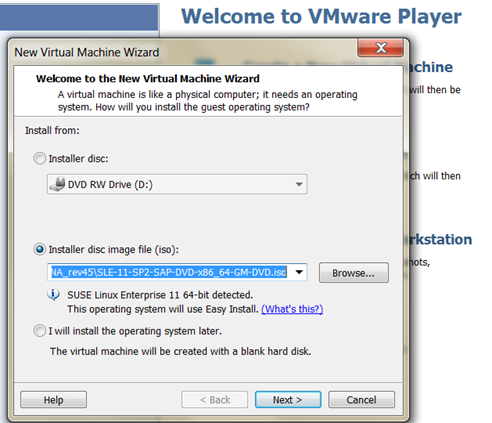

Create your basic VM for SUSE Enterprise Linux (I’m using SUSE Linux for SAP SP2).

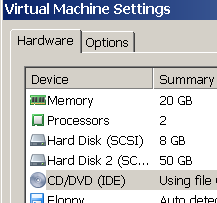

It will need the following resources:

– More than 16GB of RAM (preferably 24GB) on the physical host machine.

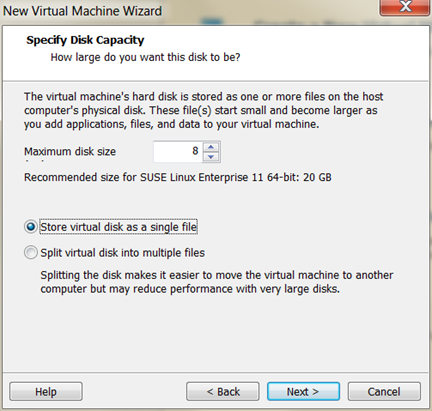

– 8GB of disk for the O/S.

– 50GB of disk for the basic HANA DB with nothing in it, plus the installed software.

– 20GB of disk on the physical host for swapping (if you don’t have 20GB of RAM).

– 2 CPUs if you can spare the cores.

– A hostname and fully qualified domain name.

– Some form of networking (use “Bridged” if you need to access this across the network).

Let’s create the VM and set the CDROM to point to the SUSE Linux SP2 install DVD ISO file:

Confirm the VM full name, your username and your preferred password (for the username and for root):

Set the location to store your VM files:

Set the initial hard disk to have 8GB and store it in one big file (it’s up to you really):

Now customise the hardware:

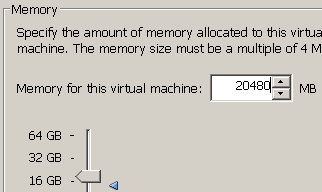

Set the RAM to 20GB or more (you really need 24GB of RAM, but I have only 16GB, so I’m in for some serious swapping). At a minimum, the VM should have 18GB of RAM for day-to-day running:

Give the VM at least 2 cores:

Use bridged networking if you need to access the VM over the network, but only if your network has DHCP enabled or you’re a network guru:

Start the VM.

We’re off.

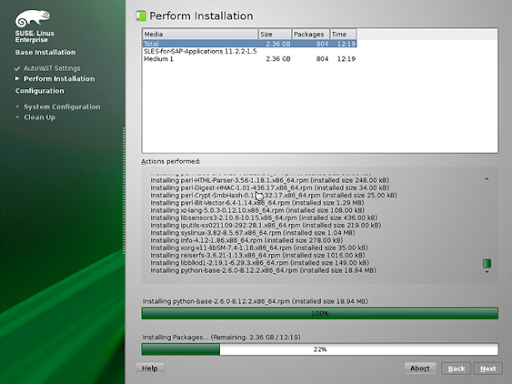

The SUSE install took 12.5 minutes in my testing on a Core i5 (unfortunately only 3rd gen 🙁):

Oh look, it reckons we have 12 minutes 19 seconds left until it completes:

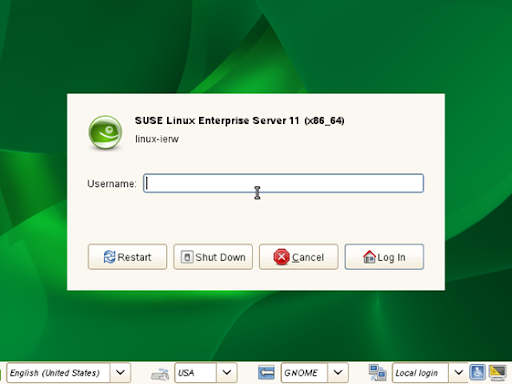

Boom, SUSE is up!

Shut down the VM again so that we can add the second hard disk:

It’s SCSI as recommended:

We set it to max out at 50GB (make yours as large as you think you will need; because we will create this in a volume group, you can always add more hard disks later and just extend the volume group in SUSE):

NOTE: If you’re going to be moving this VM around using USB sticks, you may want to choose the “Split…” option so that the files are more likely to fit.

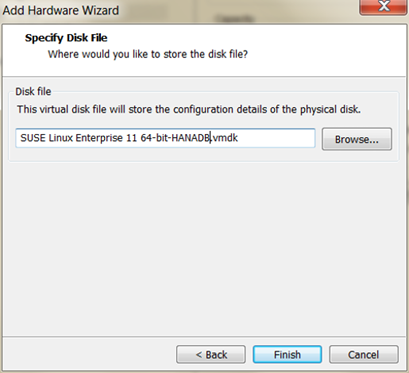

Give the VMDK a file name (I’ve added “HANADB” so I can potentially plug and play this disk to other VMs):

Also re-add the CDROM drive (mine went missing after the install, probably due to VMware Player’s Easy Install process):

Configure the CDROM to point to the ISO for the SUSE install DVD again.

Start the VM again:

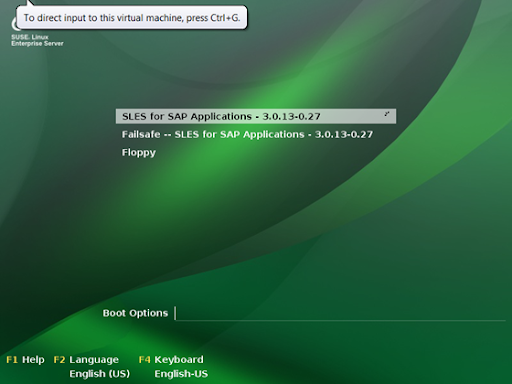

Notice that the kernel version we have is 3.0.13-0.27:

From the bottom bar in SUSE, start YaST and select the “Network Settings” item:

Disable IPv6 on the “Global Options” tab:

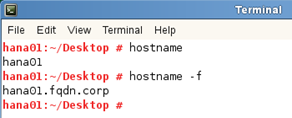

On the hostname tab set the hostname and FQDN:

Apply those changes and quit YaST.

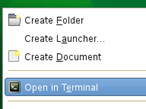

Right click the desktop and open a Terminal:

Add your IP address, fully qualified hostname (FQDN) and short hostname to the /etc/hosts file using vi:

Save the changes to the file and quit vi.
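If you prefer to script this step, here is a minimal shell sketch. It assumes the standard hosts-file layout (IP address first, then names). The function name `add_hosts_entry` and the FQDN `hana01.example.com` are hypothetical; `hana01` matches the host name used in the SQL later on and `192.168.174.129` is my VM’s address, so substitute your own values.

```shell
# Append "IP<TAB>FQDN short-name" to a hosts file, unless a line for that
# IP address is already present (compared exactly against the first field).
add_hosts_entry() {
    hosts_file=$1 ip=$2 fqdn=$3 short=$4
    if awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$hosts_file"; then
        echo "$ip is already listed in $hosts_file; not adding it again" >&2
        return 0
    fi
    printf '%s\t%s %s\n' "$ip" "$fqdn" "$short" >> "$hosts_file"
}

# Example, run as root (hana01.example.com is a hypothetical FQDN):
# add_hosts_entry /etc/hosts 192.168.174.129 hana01.example.com hana01
```

Checking the first field exactly, rather than grepping for the address, avoids false matches on similar addresses and on commented-out lines.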

Reboot the HANA VM from the terminal using “shutdown -r now”.

Once it comes back up, you need to check the hostname resolution:
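One way to check name resolution from the terminal is `getent hosts`, which follows the same lookup order as the rest of the system (as configured in /etc/nsswitch.conf). The helper below is a hypothetical wrapper, not part of SUSE or HANA:

```shell
# Look a name up through the system resolver (typically /etc/hosts first,
# then DNS, as configured in /etc/nsswitch.conf) and print the result.
# Returns non-zero if the name does not resolve.
check_resolution() {
    name=$1
    if result=$(getent hosts "$name"); then
        printf '%s -> %s\n' "$name" "$result"
    else
        echo "WARNING: $name does not resolve" >&2
        return 1
    fi
}

# Check both the short and the fully qualified host name:
# check_resolution "$(hostname)"
# check_resolution "$(hostname -f)"
```

Both names should come back with the IP address you added to /etc/hosts, not 127.0.0.1.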

According to the HANA installation guide I’m following, we need to apply some recommended settings following SAP note 1824819:

So we run the command to disable the transparent huge pages:

# echo never > /sys/kernel/mm/transparent_hugepage/enabled
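Writing to /sys only lasts until the next reboot. Here is a minimal sketch for re-applying the setting at boot, assuming SLES 11’s /etc/init.d/boot.local script (run near the end of the boot sequence) is where you want it. The function name is hypothetical, and SAP note 1824819 remains the authority on how SAP wants this configured:

```shell
# Append the THP "never" command to a boot script (default: SLES 11's
# /etc/init.d/boot.local) so it is re-applied on every boot.
# Does nothing if exactly that line is already present.
persist_thp_never() {
    script=${1:-/etc/init.d/boot.local}
    line='echo never > /sys/kernel/mm/transparent_hugepage/enabled'
    grep -qxF "$line" "$script" 2>/dev/null || echo "$line" >> "$script"
}

# Check the live setting afterwards; the active value is shown in [brackets]:
# cat /sys/kernel/mm/transparent_hugepage/enabled
```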

I checked the C-state settings and they were fine on my Intel CPU.

We’re not using XFS, so I don’t need to bother with the rest of the settings. I also chose not to patch glibc, but feel free to if you wish.

15 MINUTES HAVE NOW ELAPSED!

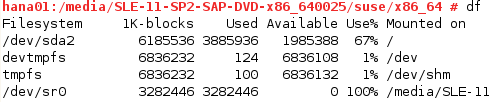

A quick recap: we should have a working SUSE VM, it should be booted, and the SUSE DVD should be loaded in the virtual CDROM.

Open a new Terminal window:

Now install the following Java 1.6 packages from the SUSE install DVD (these are listed in the HANA install guide for SPS07, page 15):

# cd /media/SLE-11-SP2-SAP-DVD-x86_640025/suse/x86_64

# rpm -i --nodeps java-1_6_0-ibm-*

The rest of the requirements are already installed in SLES 11 SP2 for SAP.

Now we create the volume group for the HANA database and software.

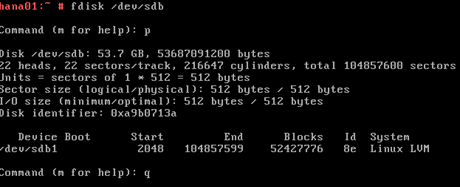

First check which disk you’re using for the O/S:
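From the terminal, a quick way to see which device holds the root filesystem is `df -P /`, wrapped here in a hypothetical helper:

```shell
# Print the device holding the root (/) filesystem, e.g. /dev/sda2.
# If / is on LVM you will get a /dev/mapper/... name instead; "pvs" then
# shows which physical disk(s) that volume group lives on.
root_device() {
    # -P forces one line per filesystem, so line 2 is always the data row.
    df -P / | awk 'NR == 2 { print $1 }'
}

# root_device
# List every disk and partition the kernel can see (sda, sda1, sdb, ...):
# cat /proc/partitions
```

Whichever disk in /proc/partitions has no partitions and does not hold / is the new, empty one.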

So, I’m using “sda” as my primary disk.

This means that “sdb” will be my HANA disk.

WARNING: Adjust the commands below to the finding above, so you use the correct unused disk and don’t overwrite your root disk.

Create the new partition on the disk:

# fdisk /dev/<your disk device e.g. sdb>

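Then enter:

n <return> (new partition)

p <return> (primary partition)

1 <return> (partition number 1)

<return> (accept the default start)

<return> (accept the default end, so the partition fills the whole disk)

t <return> (change the partition type)

1 <return> (select partition 1; if fdisk has already printed “Selected partition 1”, skip this entry)

8e <return> (hex code for “Linux LVM”)

w <return> (write the partition table and exit)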

At the end, the fdisk command exits.

Re-run fdisk to check your new partition:

Create the volume group and logical volume:

# pvcreate /dev/sdb1

# vgcreate /dev/volHANA /dev/sdb1

# lvcreate -L 51072M -n lvHANA1 volHANA

Format the new logical volume:

# mkfs.ext3 /dev/volHANA/lvHANA1

Mount the new partition:

# mkdir /hana

# echo "/dev/volHANA/lvHANA1 /hana ext3 defaults 0 0" >> /etc/fstab

# mount -a

Check the new partition:

# df -h /hana

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/volHANA-lvHANA1 50G 180M 47G 1% /hana

Create the required directory locations (H10 is our system ID, or SID):

# mkdir -p /hana/data/H10 /hana/log/H10 /hana/shared

Now set the LVM to start at boot:

# chkconfig --level 235 boot.lvm on

Now we’ve got somewhere to create our HANA database and put the software.

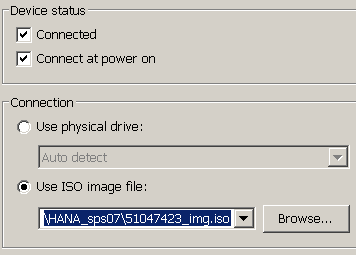

To perform the HANA install, I’ve converted my downloaded HANA install media into an ISO file that I can simply mount as a CD/DVD into the VMware tool.

Alternatively, you can use the Shared Folders capability: extract the files on your local PC and share that directory through VMware to the guest O/S. The outcome will be the same.
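If you go the Shared Folders route, VMware Tools normally mounts shares under /mnt/hgfs in the guest, so the installer will not be under /media. This hypothetical helper searches any media directory for hdbinst and prints the folder to `cd` into; it assumes the DATA_UNITS/HDB_SERVER_LINUX_X86_64 layout used later in this guide:

```shell
# Print the directory containing the hdbinst installer found under a media
# root (a mounted ISO such as /media, or a VMware shared folder under
# /mnt/hgfs). Returns non-zero if no installer is found.
find_hdbinst_dir() {
    media_root=$1
    hit=$(find "$media_root" -type f -name hdbinst \
              -path '*HDB_SERVER_LINUX_X86_64*' 2>/dev/null | head -n 1)
    [ -n "$hit" ] || return 1
    dirname "$hit"
}

# Example with a hypothetical share called HANA_SPS07:
# cd "$(find_hdbinst_dir /mnt/hgfs/HANA_SPS07)" &&
#     ./hdbinst --ignore=check_diskspace,check_min_mem
```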

Mount the ISO file (HANA install media, from which I’ve created an ISO for ease of use).

You can do this by presenting the ISO file as the virtual CDROM from within VMware.

Open the properties for the virtual machine and ensure that you select the CDROM device:

On the right-hand side, enable the device to be connected and powered on, then browse for the location of the ISO file on your PC:

Apply the settings to the VM.

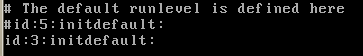

Before starting the install, we can reduce the O/S memory footprint by over 1GB.

Use vi to change the file /etc/inittab so that the default runlevel is 3 (no X11):

Also, disable the following services, which are most likely not needed and just consume memory:
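The same edit can be made non-interactively with sed. This sketch assumes the default runlevel line has the usual `id:N:initdefault:` form found in SLES 11’s inittab; the function name is hypothetical, and it relies on GNU sed’s `-i` option to edit in place:

```shell
# Set the default runlevel in an inittab-style file to 3 (multi-user with
# networking, no X11). GNU sed keeps the original as <file>.bak.
set_default_runlevel_3() {
    inittab=${1:-/etc/inittab}
    sed -i.bak 's/^id:[0-6]:initdefault:/id:3:initdefault:/' "$inittab"
}

# Verify afterwards (expect the line id:3:initdefault:):
# grep '^id:' /etc/inittab
```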

Disable VMware ThinPrint:

# chkconfig vmware-tools-thinprint off

Disable Linux printing:

# chkconfig cups off

Disable Linux auditing:

# chkconfig auditd off

Disable the Postfix e-mail (SMTP) daemon:

# chkconfig postfix off

Disable sound:

# chkconfig alsasound off

Disable SMBFS / CIFS:

# chkconfig smbfs off

Disable NFS (although you might need it):

# chkconfig nfs off

Disable splash screen:

# chkconfig splash off

Disable the Machine Check Events Logging capture:

# chkconfig mcelog off
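Since the commands above differ only in the service name, they can be run in one loop. This is a sketch: the service list is copied from above, and the `CHKCONFIG` override (set it to `echo` to preview the commands without changing anything) is my own addition, not a chkconfig feature:

```shell
# Services switched off in this guide; trim the list to suit
# (for example, drop nfs if you need it).
SERVICES="vmware-tools-thinprint cups auditd postfix alsasound smbfs nfs splash mcelog"

# Run "chkconfig <service> off" for each service in $SERVICES.
# Setting CHKCONFIG=echo prints the commands instead of running them.
disable_services() {
    cmd=${CHKCONFIG:-chkconfig}
    for svc in $SERVICES; do
        "$cmd" "$svc" off
    done
}

# Preview first, then run for real as root:
# CHKCONFIG=echo disable_services
# disable_services
```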

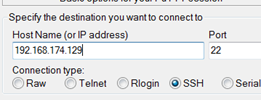

Double check the IP address of your VM:

# ifconfig | grep inet

Your IP address should be listed (mine is 192.168.174.129).

If you don’t have one, then either your VM is not quite set up correctly in the VMware properties, your networking configuration is not correct, you don’t have a DHCP server on your local network, or your network security is preventing your VM from registering its MAC address. It’s complex.

Assuming that you have an IP address, check that you can connect to the SSH server in your VM using PuTTY:
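To see just the IPv4 address rather than every `inet` line, the ifconfig output can be filtered with awk. This hypothetical helper accepts both the older `inet addr:x.x.x.x` format printed by net-tools on SLES 11 and the newer `inet x.x.x.x` format, and skips loopback addresses:

```shell
# Print the non-loopback IPv4 addresses from ifconfig output read on stdin.
# inet6 lines are ignored because their first field is "inet6", not "inet".
ipv4_addresses() {
    awk '$1 == "inet" {
        ip = $2
        sub(/^addr:/, "", ip)        # strip the older "addr:" prefix
        if (ip !~ /^127\./) print ip # skip loopback
    }'
}

# Usage on the VM:
# /sbin/ifconfig | ipv4_addresses
```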

Enter the IP address of your VM server:

Log into the server as root:

From this point onwards, it is advisable to connect with the PuTTY client, as it provides richer access to your server environment than the basic VMware console connection.

You now need to restart the virtual server so that the runlevel and service changes take effect:

# shutdown -r now

Once the server is back, re-connect with PUTTY.

We will not use the GUI for installing the HANA system (hdblcmgui), because this takes more time and more memory away from our basic requirement of a HANA DB.

Mount the CD-ROM inside the SUSE O/S:

# mount /dev/cdrom /media

Change to the install location inside the VM and then run the hdbinst tool (the most basic of the HDB installation tools):

# cd /media/DATA_UNITS/HDB_SERVER_LINUX_X86_64

# ./hdbinst --ignore=check_diskspace,check_min_mem

You will be prompted for certain pieces of information. Below is what was entered:

Installation Path: /hana/shared

System ID: H10

Instance Number: 10

System Administrator Password: hanahana

System Administrator Home Dir: /usr/sap/H10/home

System Administrator ID: 10001

System Administrator Shell: /bin/sh

Data Volumes: /hana/data/H10

Log Volumes: /hana/log/H10

Database SYSTEM user password: Hanahana1

Restart instance after reboot: N

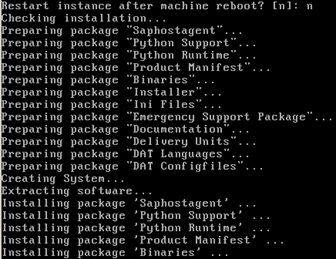

Installation will begin:

My HANA DB install took approximately 1 hour 20 minutes on a Core i5 with 16GB RAM, 5400rpm HDD (encrypted) plus a large pagefile (not encrypted):

****** OPTIONAL ********

After the install completed, I then followed SAP note 1697613 to remove the XS-Engine from the landscape to reduce the memory footprint even further:

From HANA Studio, right-click the system and launch the SQL Console:

Run the following SQL statements (changing the host name accordingly):

select host from m_services where service_name = 'xsengine';

select VOLUME_ID from m_volumes where service_name = 'xsengine';

ALTER SYSTEM ALTER CONFIGURATION ('daemon.ini', 'host', 'hana01') UNSET ('xsengine', 'instances') WITH RECONFIGURE;

ALTER SYSTEM ALTER CONFIGURATION ('topology.ini', 'system') UNSET ('/host/hana01', 'xsengine') WITH RECONFIGURE;

NOTE: Change the value “<NUM>” below to the VOLUME_ID reported by the second SQL statement above.

ALTER SYSTEM ALTER CONFIGURATION ('topology.ini', 'system') UNSET ('/volumes', '<NUM>') WITH RECONFIGURE;

The XS-Engine process will disappear.

You can now restart the HANA instance using HANA Studio.

****************

This completes the HANA DB install.

At the end of this process you should have a running HANA database in which you can execute queries.

You may be able to reduce the VM memory allocation to 16GB and still have the HANA instance start (if you remove the XS-Engine).

Note that we don’t have the HANA Lifecycle Manager installed. You’ll need to install it if you want to patch this instance. However, for 15 minutes’ work, you can simply re-install!

NOTE: Consider SAP note 1801227 “Change Time Zone if SID is not changed via Config. Tool” v4. The default timezone for the HANA database doesn’t appear to be set correctly.

You can also check/change the Linux O/S timezone in file “/etc/sysconfig/clock”.
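A quick way to see the current setting is to read the TIMEZONE line from that file. This sketch assumes the `TIMEZONE="Area/City"` format used by SLES 11 (for example `TIMEZONE="Europe/London"`); the function name is hypothetical:

```shell
# Print the TIMEZONE value from a sysconfig clock file, without quotes.
# Lines such as DEFAULT_TIMEZONE=... are not matched (anchored at ^TIMEZONE).
read_timezone() {
    clock_file=${1:-/etc/sysconfig/clock}
    sed -n 's/^TIMEZONE="\{0,1\}\([^"]*\)"\{0,1\}$/\1/p' "$clock_file"
}

# Compare with what the running system reports:
# read_timezone; date +%Z
```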