If your HANA system is regularly experiencing OOM (Out Of Memory) errors, then there are a number of things that you can do to try and reduce memory consumption.

Tip #1: Partition Large Tables Correctly

If there are large Column Store tables in your HANA system, you should partition them.

Whilst this is an absolute must in a HANA scale-out scenario (for enabling multi-node parallelism), it might not be so obvious that it can also help in a single node HANA system.

Partitioning a column table means that only the required partitions of the table are loaded into memory when accessed, you would think.

Partitioning a large table into smaller chunks will therefore help to reduce the memory usage of the table during SQL queries and also during updates.

During updates, each partition gets its own delta cache area.

Choosing how to partition a table is slightly more difficult and will depend on whether the table is a custom table, SAP standard table or other.

Plus, you will need to know what and how queries or updates are executed against the table. A period of monitoring is suggested, to enable to you collect the required information to be able to make a decision.

One thing you should try to do, is partition the table using the most logical, selective columns.

Read on for a simple example with a twist!

A simple example, a single node HANA system has 1 large column table T1.

The table is partitioned into ranges based on the date column INVOICEDATE:

CREATE COLUMN TABLE “DARRYL”.”T1″ (“INVOICEREF” VARCHAR(1) NOT NULL ,

“INVOICEDATE” DAYDATE CS_DAYDATE NOT NULL ) UNLOAD PRIORITY 5 AUTO MERGE WITH PARAMETERS (‘PARTITION_SPEC’ = ‘RANGE year(INVOICEDATE) 2000-2001,2001-2002,2002-2003,*’)

;

CREATE UNIQUE INDEX “I1” ON “DARRYL”.”T1″ ( “INVOICEREF” ASC ) NONLEAF PARTIAL KEY LENGTH 1;

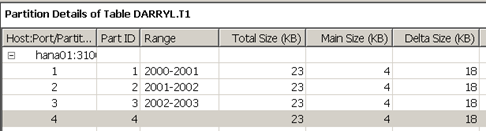

As you can see, I’ve created 3 partitions by year: 2000 to 2001, 2001 to 2002 and 2002 to 2003.

This will actually create 4 partitions: year 2000, year 2001, year 2002 and year <OTHER>.

Insert 5 records into the table:

insert into darryl.t1 (INVOICEREF,INVOICEDATE) values(‘1′,’2000-01-01’)

insert into darryl.t1 (INVOICEREF,INVOICEDATE) values(‘2′,’2001-01-01’)

insert into darryl.t1 (INVOICEREF,INVOICEDATE) values(‘3′,’2002-01-01’)

insert into darryl.t1 (INVOICEREF,INVOICEDATE) values(‘4′,’2003-01-01’)

insert into darryl.t1 (INVOICEREF,INVOICEDATE) values(‘5′,’2004-01-01’)

Inside the Table Distribution tab, you will now see that the records have been inserted according to their values into the respective partitions (see Raw Record Count field on the right):

The last two records for year 2004 and 2003 are in the fourth partition.

You can also see that each partition has a Delta Size, and that the Delta Size for the fourth partition with the most records, is larger than the other partitions.

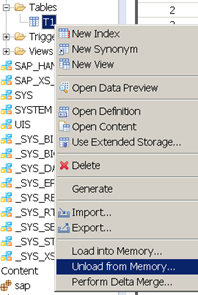

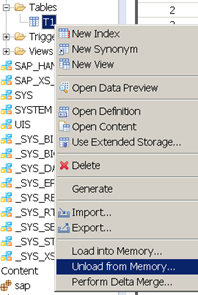

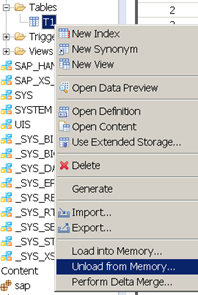

Unload the table from memory:

Refreshing the Table Distribution tab now shows the table partitions to have zero size in memory:

Now select the records for the years 2004 and 2005 only:

select * from darryl.t1 where invoicedate in (‘2004-01-01′,’2003-01-01’)

Refreshing the Table Distribution tab now shows the tables to have non-zero size in memory for ALL partitions!

All of the records from all of the partitions appear to be loaded!

What went wrong?

Well, it’s simple, we didn’t create an index on the column INVOICEDATE.

This forced HANA to scan through the entire table to access the required records, meaning that it needed to load them all into memory.

Let’s create an index in INVOICEDATE:

CREATE UNIQUE INDEX “I1” ON “DARRYL”.”T1″ ( “INVOICEDATE” ASC ) NONLEAF PARTIAL KEY LENGTH 1;

Unload the table from memory:

Refreshing the Table Distribution tab now shows the tables to have zero size in memory:

Now re-execute the SELECT statement:

select * from darryl.t1 where invoicedate in (‘2004-01-01′,’2003-01-01’)

Once again, on the Table Distribution tab, we can see that it has accessed all partitions, AGAIN!:

What went wrong this time? Well, HANA doesn’t yet have any statistics on the table data, so it simply ignored the index.

If you now unload the table from memory once again (we haven’t done anything else):

Now re-execute the SELECT statement:

select * from darryl.t1 where invoicedate in (‘2004-01-01′,’2003-01-01’)

Look at the Table Distribution tab:

You can see that HANA has now only accessed the final partition of the table. The other partitions have not been loaded into memory.

At first I thought this feature might be due to statistics, so I tried removing them from the table T1 (drop statistics on T1;). Then I retried the process of unloading and re-running the query. This had no effect, HANA correctly went straight to the fourth partition.

This left me with one other option, the Plan Cache.

Clearing the Plan Cache using:

ALTER SYSTEM CLEAR SQL PLAN CACHE

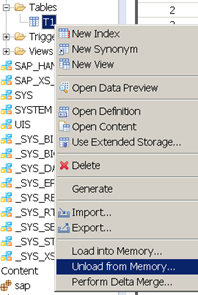

I then re-ran the test by unloading the table from memory:

Re-executing the SQL SELECT:

select * from darryl.t1 where invoicedate in (‘2004-01-01′,’2003-01-01’)

Look at the Table Distribution tab:

Bingo!

The Plan Cache was storing some form of execution plan statistics that meant that it was accessing the fourth partition straight away.

Each time the table is unloaded, the statistics from the existing Plan Cache remain and are re-used upon next execution of the query, which means HANA is able to go straight to the fourth partition.

Summary:

Partitioning is a great way of parallelising access to a table in HANA.

It also serves to help reduce memory by only loading specific partitions into memory when they are required.

In order to effectively use partitioning, you need to partition on an indexed column.

The initial access of a newly partition table with a new index, does not enable the benefits of partition until the second subsequent access of the table due to the Plan Cache. A method/process of pre-loading the execution plan/statistics into the cache is required.